|

|

Post by bluatigro on Jun 17, 2018 8:46:41 GMT

this i want to learn for a long time

i tryed it many times

first step :

perseptron for recoginising a < b

it works and learns

the mistakes are +-15%

if it did not work it wood be +-50%

'' bluatigro 17 jun 2018

'' perseptron

global inmax , uit

inmax = 2

dim x( inmax ) , w( inmax )

x( 0 ) = 1 ''bias

''init weights

for i = 1 to inmax

w( i ) = rnd( 0 )

next i

''training

for i = 0 to 1000

x( 1 ) = rnd( 0 )

x( 2 ) = rnd( 0 )

wish = x( 1 ) < x( 2 )

call train wish

print abs( wish - uit )

next i

print "[ test perseptron ]"

for i = 0 to 1000

x( 1 ) = rnd( 0 )

x( 2 ) = rnd( 0 )

call calc

if x( 1 ) < x( 2 ) xor uit > .5 then

fout = fout + 1

end if

next i

print fout / 10 ; " % error"

print w( 0 ) , w( 1 ) , w( 2 )

end

sub calc

sum = 0

for i = 0 to inmax

sum = sum + x( i ) * w( i )

next i

uit = 1 / ( 1 + exp( 0 - sum ) )

end sub

sub train wish

call calc

mistake = wish - uit

for i = 0 to inmax

w( i ) = w( i ) + 0.01 * mistake * x( i )

next i

end sub

|

|

|

|

Post by bluatigro on Jun 17, 2018 9:21:47 GMT

update :

step 2 starting whit a 1 hidden layer

'' bluatigro 17 jun 2018

'' neural net whit 1 hidden layer

global inmax , hmax , uitmax

inmax = 2

hmax = 3

uitmax = 1

dim x( inmax ) , xh( inmax , hmax ) , h( hmax )

dim huit( hmax , uitmax ) , uit( uitmax )

dim wish( uitmax )

x( 0 ) = 1 ''bias

h( 0 ) = 1 ''bias

''init weights

for i = 1 to inmax

for h = 0 to hmax

wh( i , h ) = rnd( 0 )

next h

next i

for h = 0 to hmax

for u = 0 to uitmax

hu( h , u ) = rnd( 0 )

next u

next h

''training

for i = 0 to 1000

for in = 1 to inmax

x( in ) = rnd( 0 )

next in

call train

mistake = 0

for u = 0 to uitmax

mistake = mistake _

+ abs( uit( u ) - wish( u ) )

next u

print mistake

next i

print "[ test neural net ]"

for i = 0 to 10

for in = 1 to inmax

x( in ) = rnd( 0 )

next in

mistake = 0

call calc

for u = 0 to uitmax

mistake = mistake _

+ abs( uit( u ) - wish( u ) )

next u

print mistake

next i

end

function signiod( x )

signoid = 1 / ( 1 + exp( 0 - x ) )

end function

sub calc

for h = 0 to hmax

sum = 0

for i = 0 to inmax

sum = sum + x( i ) * xh( i , h )

next i

h( h ) = signoid( sum )

next h

for u = 0 to uitmax

sum = 0

for h = 0 to hmax

sum = sum + h( h ) * hu( h , u )

next h

uit( u ) = signoid( sum )

next u

end sub

sub train

call calc

end sub

|

|

|

|

Post by bluatigro on Jun 17, 2018 9:39:53 GMT

update :

now whit a spimple test problem

'' bluatigro 17 jun 2018

'' neural net whit 1 hidden layer

global inmax , hmax , uitmax

inmax = 2

hmax = 3

uitmax = 1

dim x( inmax ) , xh( inmax , hmax ) , h( hmax )

dim huit( hmax , uitmax ) , uit( uitmax )

dim wish( uitmax )

x( 0 ) = 1 ''bias

h( 0 ) = 1 ''bias

''init weights

for i = 1 to inmax

for h = 0 to hmax

wh( i , h ) = rnd( 0 )

next h

next i

for h = 0 to hmax

for u = 0 to uitmax

hu( h , u ) = rnd( 0 )

next u

next h

''training

for i = 0 to 1000

mistake = 0

for a = 0 to 1

x( 1 ) = a

for b = 0 to 1

x( 2 ) = b )

for c = 0 to 1

x( 3 ) = c

wish( 1 ) = a xor b xor c

call train

for u = 0 to uitmax

mistake = mistake _

+ abs( uit( u ) - wish( u ) )

next u

next c

next b

next c

print mistake

next i

print "[ test neural net ]"

mistake = 0

for a = 0 to 1

x( 1 ) = a

for b = 0 to 1

x( 2 ) = b )

for c = 0 to 1

x( 3 ) = c

wish( 1 ) = a xor b xor c

for u = 0 to uitmax

mistake = mistake _

+ abs( uit( u ) - wish( u ) )

next u

next c

next b

next a

print mistake

end

function signiod( x )

signoid = 1 / ( 1 + exp( 0 - x ) )

end function

sub calc

for h = 0 to hmax

sum = 0

for i = 0 to inmax

sum = sum + x( i ) * xh( i , h )

next i

h( h ) = signoid( sum )

next h

for u = 0 to uitmax

sum = 0

for h = 0 to hmax

sum = sum + h( h ) * hu( h , u )

next h

uit( u ) = signoid( sum )

next u

end sub

sub train

call calc

end sub

|

|

|

|

Post by bluatigro on Jul 8, 2018 5:44:25 GMT

update :

fount a example in c++

i translated it

error :

syntacks error ?

'' http://computing.dcu.ie/~humphrys/Notes/Neural/Code/index.html

'' input I[i] = any real numbers ("doubles" in C++)

'' y[j]

'' network output y[k] = sigmoid continuous 0 to 1

'' correct output O[k] = continuous 0 to 1

'' assumes throughout that all i are linked to all j, and that all j are linked to all k

'' if want some NOT to be connected, will need to introduce:

'' Boolean connected [ TOTAL ] [ TOTAL ];

'' initialise it, and then keep checking:

'' if (connected[i][j])

'' don't really need to do this,

'' since we can LEARN a weight of 0 on this link

''test data

global NOINPUT : NOINPUT = 1

global NOHIDDEN : NOHIDDEN = 30

GLOBAL NOOUTPUT : NOOUTPUT = 1

'' I = x = double lox to hix

global lox : lox = 0

global hix : hix = 9

'' want it to store f(x) = double lof to hif

global lofc : lofc = -2.5 '' approximate bounds

global hifc : hifc = 3.2

GLOBAL RATE : RATE = 0.3

GLOBAL C : C = 0.1 '' start w's in range -C, C

GLOBAL TOTAL : TOTAL = NOINPUT + NOHIDDEN + NOOUTPUT

'' units all unique ids - so no ambiguity about which we refer to:

global loi : loi = 0

global hii : hii = NOINPUT - 1

global loj : loj = NOINPUT

global hij : hij = NOINPUT + NOHIDDEN - 1

global lok : lok = NOINPUT + NOHIDDEN

global hik : hik = NOINPUT + NOHIDDEN + NOOUTPUT - 1

'' input I[i] = any real numbers ("doubles" in C++)

'' y[j]

'' network output y[k] = sigmoid continuous 0 to 1

'' correct output O[k] = continuous 0 to 1

'' ssumes throughout that all i are linked to all j, and that all j are linked to all k

'' if want some NOT to be connected, will need to introduce:

'' Boolean connected [ TOTAL ] [ TOTAL ];

'' initialise it, and then keep checking:

'' if (connected[i][j])

'' don't really need to do this,

'' since we can LEARN a weight of 0 on this lin

dim in( TOTAL )

dim y( TOTAL )

dim O( TOTAL )

dim w( TOTAL , TOTAL ) '' w[i][j]

dim wt( TOTAL ) '' bias weights wt[i]

dim dx( TOTAL ) '' dE/dx[i]

dim dy( TOTAL ) '' dE/dy[i]

call init

call learn 1000

call exploit

print "[ game over ]"

end

''How Input is passed forward through the network:

function signoid( x )

signoid = 1 / ( 1 + exp( 0 - x ) )

end function

sub forwardpass

''----- forwardpass I[i] -> y[j] ------------------------------------------------

for j = loj to hij

x = 0

for i = loi to hii

x = x + in( i ) * w( i , j )

next i

y( j ) = sigmoid( x - wt( j ) )

next j

''----- forwardpass y[j] -> y[k] ------------------------------------------------

for k = lok to hik

x = 0

for j = loj to hij

x = x + ( y( j ) * w( j , k ) )

y( k ) = sigmoid( x - wt( k ) )

next j

next k

end sub

''Initialisation:

'' going to do w++ and w--

'' so might think should start with all w=0

'' (all y[k]=0.5 - halfway between possibles 0 and 1)

'' in fact, if all w same they tend to march in step together

'' need *asymmetry* if want them to specialise (form a representation scheme)

'' best to start with diverse w

''

'' also, large positive or negative w -> slow learning

'' so start with small absolute w -> fast learning

function range( l , h )

range = rnd * ( h - l ) + l

end function

sub init

for i = loi to hii

for j = loj to hij

w( i , j ) = range( -C , C )

next j

next i

for j = loj to hij

for k = lok to hik

w( j , k ) = range( -C , C )

next k

next j

for j = loj to hij

wt( j ) = range( -C , C )

next j

for k = lok to hik

wt( k ) = range( -C , C )

next k

end sub

''How Error is back-propagated through the network:

sub backpropagate

''----- backpropagate O[k] -> dy[k] -> dx[k] -> w[j][k],wt[k] ---------------------------------

for k = lok to hik

dy( k ) = y( k ) - O( k )

dx( k ) = ( dy( k ) ) * y( k ) * ( 1 - y( k ) )

next k

''----- backpropagate dx[k],w[j][k] -> dy[j] -> dx[j] -> w[i][j],wt[j] ------------------------

''----- use OLD w values here (that's what the equations refer to) .. -------------------------

for j = loj to hij

t = 0

for k = lok to hik

t = t + ( dx( k ) * w( j , k ) )

next k

dy( j ) = t

dx( j ) = dy( j ) * y( j ) * ( 1 - y( j ) )

next j

''----- .. do all w changes together at end ---------------------------------------------------

for j = loj to hij

for k = lok to hik

dw = dx( k ) * y( j )

w( j , k ) = w( j , k ) - ( RATE * dw )

next k

next j

for i = loi to hii

for j = loj to hij

dw = dx( j ) * in( i )

w( i , j ) = w( i , j ) - ( RATE * dw )

next j

next i

for k = lok to hik

dw = -dx( k )

wt( k ) = wt( k ) - ( RATE * dw )

next k

for j = loj to hij

dw = -dx( j )

wt( j ) = wt( j ) - ( RATE * dw )

next j

end sub

''Ways of using the Network:

sub learn CEILING

for m = 1 to CEILING

call newIO

'' new I/O pair

'' put I into I[i]

'' put O into O[k]

call forwardpass

call backpropagate

next m

end sub

sub exploit

for m = 1 to 30

call newIO

call forwardpass

next m

end sub

'' input x

'' output y

'' adjust difference between y and f(x)

function f( x )

'' f = sqr( x )

f = sin( x )

'' f = sin( x ) + sin( 2 * x ) + sin( 5 * x ) + cos( x )

end function

'' O = f(x) normalised to range 0 to 1

function normalise( t )

return ( t - lofc ) / ( hifc - lofc )

end function

function expand( t ) '' goes the other way

return lofc + t * ( hifc - lofc )

end function

sub newIO

x = range( lox , hix )

''there is only one, just don't want to remember number:

for i = loi to hii

in( i ) = x

next i

'' there is only one, just don't want to remember number:

for k = lok to hik

O( k ) = normalise( f( x ) )

next k

end sub

'' Note it never even sees the same exemplar twice!

sub reportIO

for i = loi to hii

xx = in( i )

next i

for k = lok to hik

yy = expand( y( k ) )

next k

print "x = ", xx

print "y = ", yy

print "f(x) = ", f( xx )

end sub

|

|

|

|

Post by bluatigro on Jul 8, 2018 5:47:30 GMT

function range( l , h )

range = rnd( 0 ) * ( h - l ) + l

end function

|

|

|

|

Post by tenochtitlanuk on Jul 8, 2018 9:32:27 GMT

You had a few errors- LB/JB has no 'unary minus' so must always use '0 -x' format. 'signoid' and 'sigmoid' , and a couple of functions were entered in non-JB format.

Now runs until it hits an underflow error. Haven't investigated why because I haven't time to study what is happening...

Shown below with remmed row of <<<<<<<<<<<<<<<<<<<<<<<

'' http://computing.dcu.ie/~humphrys/Notes/Neural/Code/index.html

'' input I[i] = any real numbers ("doubles" in C++)

'' y[j]

'' network output y[k] = sigmoid continuous 0 to 1

'' correct output O[k] = continuous 0 to 1

'' assumes throughout that all i are linked to all j, and that all j are linked to all k

'' if want some NOT to be connected, will need to introduce:

'' Boolean connected [ TOTAL ] [ TOTAL ];

'' initialise it, and then keep checking:

'' if (connected[i][j])

'' don't really need to do this,

'' since we can LEARN a weight of 0 on this link

''test data

global NOINPUT : NOINPUT = 1

global NOHIDDEN : NOHIDDEN = 30

GLOBAL NOOUTPUT : NOOUTPUT = 1

'' I = x = double lox to hix

global lox : lox = 0

global hix : hix = 9

'' want it to store f(x) = double lof to hif

global lofc : lofc = -2.5 '' approximate bounds

global hifc : hifc = 3.2

GLOBAL RATE : RATE = 0.3

GLOBAL C : C = 0.1 '' start w's in range -C, C

GLOBAL TOTAL : TOTAL = NOINPUT + NOHIDDEN + NOOUTPUT

'' units all unique ids - so no ambiguity about which we refer to:

global loi : loi = 0

global hii : hii = NOINPUT - 1

global loj : loj = NOINPUT

global hij : hij = NOINPUT + NOHIDDEN - 1

global lok : lok = NOINPUT + NOHIDDEN

global hik : hik = NOINPUT + NOHIDDEN + NOOUTPUT - 1

'' input I[i] = any real numbers ("doubles" in C++)

'' y[j]

'' network output y[k] = sigmoid continuous 0 to 1

'' correct output O[k] = continuous 0 to 1

'' ssumes throughout that all i are linked to all j, and that all j are linked to all k

'' if want some NOT to be connected, will need to introduce:

'' Boolean connected [ TOTAL ] [ TOTAL ];

'' initialise it, and then keep checking:

'' if (connected[i][j])

'' don't really need to do this,

'' since we can LEARN a weight of 0 on this lin

dim in( TOTAL )

dim y( TOTAL )

dim O( TOTAL )

dim w( TOTAL , TOTAL ) '' w[i][j]

dim wt( TOTAL ) '' bias weights wt[i]

dim dx( TOTAL ) '' dE/dx[i]

dim dy( TOTAL ) '' dE/dy[i]

call init

call learn 1000

call exploit

print "[ game over ]"

end

''How Input is passed forward through the network:

function sigmoid( x ) ' program had 'signoid' in places <<<<<<<<<<<<<<<<<<

sigmoid = 1 / ( 1 + exp( 0 - x ) )

end function

sub forwardpass

''----- forwardpass I[i] -> y[j] ------------------------------------------------

for j = loj to hij

x = 0

for i = loi to hii

x = x + in( i ) * w( i , j )

next i

y( j ) = sigmoid( x - wt( j ) )

next j

''----- forwardpass y[j] -> y[k] ------------------------------------------------

for k = lok to hik

x = 0

for j = loj to hij

x = x + ( y( j ) * w( j , k ) )

y( k ) = sigmoid( x - wt( k ) )

next j

next k

end sub

''Initialisation:

'' going to do w++ and w--

'' so might think should start with all w=0

'' (all y[k]=0.5 - halfway between possibles 0 and 1)

'' in fact, if all w same they tend to march in step together

'' need *asymmetry* if want them to specialise (form a representation scheme)

'' best to start with diverse w

''

'' also, large positive or negative w -> slow learning

'' so start with small absolute w -> fast learning

function range( l , h )

range = rnd( 1 ) * ( h - l ) + l

end function

sub init

for i = loi to hii

for j = loj to hij

v= range( 0 -C , C ) ' <<<<<<<<<<<<<<<<<<<<<<<<

w( i , j ) =v

next j

next i

for j = loj to hij

for k = lok to hik

w( j , k ) = range( 0 -C , C ) ' <<<<<<<<<<<<<<<<<<<<<<<<

next k

next j

for j = loj to hij

wt( j ) = range( 0 -C , C ) ' <<<<<<<<<<<<<<<<<<<<<<<<

next j

for k = lok to hik

wt( k ) = range( 0 -C , C ) ' <<<<<<<<<<<<<<<<<<<<<<<<

next k

end sub

''How Error is back-propagated through the network:

sub backpropagate

''----- backpropagate O[k] -> dy[k] -> dx[k] -> w[j][k],wt[k] ---------------------------------

for k = lok to hik

dy( k ) = y( k ) - O( k )

dx( k ) = ( dy( k ) ) * y( k ) * ( 1 - y( k ) )

next k

''----- backpropagate dx[k],w[j][k] -> dy[j] -> dx[j] -> w[i][j],wt[j] ------------------------

''----- use OLD w values here (that's what the equations refer to) .. -------------------------

for j = loj to hij

t = 0

for k = lok to hik

t = t + ( dx( k ) * w( j , k ) )

next k

dy( j ) = t

dx( j ) = dy( j ) * y( j ) * ( 1 - y( j ) )

next j

''----- .. do all w changes together at end ---------------------------------------------------

for j = loj to hij

for k = lok to hik

dw = dx( k ) * y( j )

w( j , k ) = w( j , k ) - ( RATE * dw )

next k

next j

for i = loi to hii

for j = loj to hij

dw = dx( j ) * in( i )

w( i , j ) = w( i , j ) - ( RATE * dw )

next j

next i

for k = lok to hik

dw = -dx( k )

wt( k ) = wt( k ) - ( RATE * dw )

next k

for j = loj to hij

dw = -dx( j )

wt( j ) = wt( j ) - ( RATE * dw )

next j

end sub

''Ways of using the Network:

sub learn CEILING

for m = 1 to CEILING

call newIO

'' new I/O pair

'' put I into I[i]

'' put O into O[k]

call forwardpass

call backpropagate

next m

end sub

sub exploit

for m = 1 to 30

call newIO

call forwardpass

next m

end sub

'' input x

'' output y

'' adjust difference between y and f(x)

function f( x )

'' f = sqr( x )

f = sin( x )

'' f = sin( x ) + sin( 2 * x ) + sin( 5 * x ) + cos( x )

end function

'' O = f(x) normalised to range 0 to 1

function normalise( t )

normalise = ( t - lofc ) / ( hifc - lofc ) ' <<<<<<<<<<<<<<<<<<<<<<<

end function

function expand( t ) '' goes the other way

expand = lofc + t * ( hifc - lofc ) ' <<<<<<<<<<<<<<<<<<<<<<<

end function

sub newIO

x = range( lox , hix )

''there is only one, just don't want to remember number:

for i = loi to hii

in( i ) = x

next i

'' there is only one, just don't want to remember number:

for k = lok to hik

O( k ) = normalise( f( x ) )

next k

end sub

'' Note it never even sees the same exemplar twice!

sub reportIO

for i = loi to hii

xx = in( i )

next i

for k = lok to hik

yy = expand( y( k ) )

next k

print "x = ", xx

print "y = ", yy

print "f(x) = ", f( xx )

end sub

|

|

|

|

Post by bluatigro on Sept 22, 2018 9:00:36 GMT

update :

translated a python ANN

REM :

i dont know how to do tanh()

so this is not tested !!

'' bluatigro 20 sept 2018

'' ann try 3

'' based on : http://code.activestate.com/recipes

'' /578148-simple-back-propagation-neural-network-in-python-s/

global ni , nh , no

ni = 2

nh = 2

no = 1

dim ai( ni ) , in( ni - 1 ) , ah( nh - 1 )

dim ao( no - 1 ) , wish( no - 1 )

dim wi( ni - 1 , nh - 1 )

dim wo( nh - 1 , no - 1 )

dim ci( ni - 1 , nh - 1 )

dim co( nh - 1 , no - 1 )

global paterns

paterns = 4

dim p( ni - 1 , paterns - 1 )

dim uit( no - 1 , paterns - 1 )

call init

''init inpout and output paterns

for p = 0 to paterns - 1

read a , b

p( 0 , p ) = a

p( 1 , p ) = b

uit( 0 , p ) = a xor b

next p

data 0,0 , 0,1 , 1,0 , 1,1

''let NN live and learn

for e = 0 to 1000

''for eatch patern

fout = 0

for p = 0 to paterns - 1

''fill input cel's

for i = 0 to ni - 1

ai( i ) = p( i , p )

next i

for o = 0 to no - 1

wish( o ) = uit( o , p )

next o

call calc

fout = fout + backprop( .5 , .5 )

next p

print e , p

next e

end

function range( l , h )

range = rnd(0) * ( h - l ) + l

end function

sub init

''init neural net

for i = 0 to ni

ai( i ) = 1

next i

for i = 0 to nh - 1

ah( i ) = 1

next i

for i = 0 to no - 1

ao( i ) = 1

next i

for i = 0 to ni - 1

for h = 0 to nh - 1

wi( i , h ) = range( -.2 , .2 )

next h

next i

for h = 0 to nh - 1

for o = 0 to no - 1

wo( h , o ) = range( -2 , 2 )

next o

next h

end sub

sub calc

''forwart pass of neural net

for i = 0 to ni

ai( i ) = in( i )

next i

for h = 0 to nh - 1

sum = 0

for i = 0 to ni - 1

sum = sum + ai( i ) * wi( i , h )

next i

ah( h ) = signoid( sum / ni )

next h

for o = 0 to no

sum = 0

for h = 0 to nh - 1

sum = sum + ah( h ) * wo( h , o )

next h

ao( o ) = signoid( sum / nh )

next o

end sub

function tanh( x )

'' who knows this ?

end function

function signoid( x )

signoid = tanh( x )

end function

function dsignoid( x )

dsignoid = 1 - x ^ 2

end function

function backprop( n , m )

''learing

for i = 0 to no

od( i ) = 0

next i

for k = 0 to no

fout = wish( k ) - ao( k )

od( k ) = fout * dsinoid( ao( k ) )

next k

for j = 0 to nh

for k = 0 to no

c = od( k ) * ah( j )

wo( j , k ) = wo( j , k ) + n * c + m * co( j , k )

co( j , k ) = c

next k

next j

for i = 0 to ni

for j = 0 to nh

c = hd( j ) * ai( i )

wi( i , j ) = wi( i , j ) + n * c + m * ci( i , j )

ci( i , j ) = c

next j

next i

fout = 0

for k = 0 to no

fout = fout _

+ ( wish( k ) - ao( k ) ) ^ 2

next k

backprop = fout

end sub

|

|

|

|

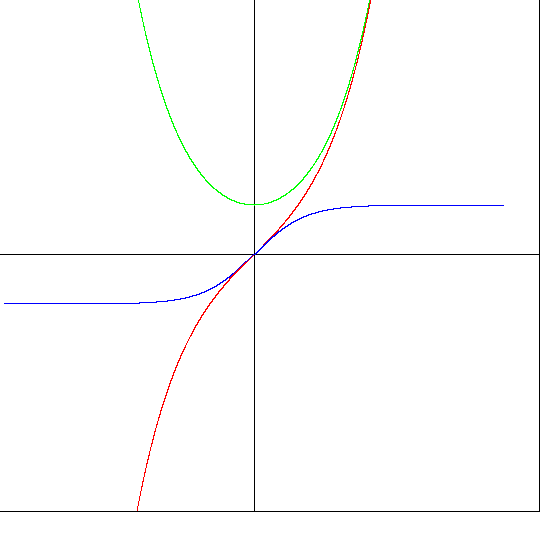

Post by tenochtitlanuk on Sept 23, 2018 8:53:52 GMT

Here are the three hyperbolic functions in LB. Seem OK- the graph agrees with wikipedia..

nomainwin

WindowWidth =550

WindowHeight =550

open "Demo of hyperbolic functions" for graphics_nsb as #gw

#gw "trapclose quit"

#gw "goto 255 0 ; down ; goto 255 550 ; up"

#gw "goto 0 255 ; down ; goto 550 255"

for x =-5 to 5 step 0.001

xScreen =255 +x *50

yScreen =255 -int( 50 *sinh( x))

#gw "color red ; set "; xScreen; " "; yScreen

yScreen =255 -int( 50 *cosh( x))

#gw "color green ; set "; xScreen; " "; yScreen

yScreen =255 -int( 50 *tanh( x))

#gw "color blue ; set "; xScreen; " "; yScreen

next x

#gw "getbmp scr 1 1 550 550"

bmpsave "scr", "hyperbolics.bmp"

wait

end

sub quit h$

close #gw

end

end sub

function sinh( x)

sinh =( 1 -exp( 0 -2 *x)) /( 2 *exp( 0 -x))

end function

function cosh( x)

cosh =( 1 +exp( 0 -2 *x)) /( 2 * exp( 0 -x))

end function

function tanh( x)

tanh =( 1 -exp( 0 -2 *x)) /( 1 + exp( 0 -2 *x))

end function

|

|

|

|

Post by bluatigro on Sept 24, 2018 5:51:16 GMT

thanks for help

update :

build a tanh()

added REM's

added some forgotten code

error :

it does not learn

did i make a typo ?

'' bluatigro 20 sept 2018

'' ann try 3

'' based on :

''http://code.activestate.com/recipes/578148-simple-back-propagation-neural-network-in-python-s/

global ni , nh , no

ni = 2

nh = 2

no = 1

dim ai( ni ) , in( ni ) , ah( nh - 1 )

dim ao( no - 1 ) , wish( no - 1 )

dim wi( ni , nh - 1 )

dim wo( nh - 1 , no - 1 )

dim ci( ni , nh - 1 )

dim co( nh - 1 , no - 1 )

dim od( nh ) , hd( nh )

global paterns

paterns = 4

dim p( ni - 1 , paterns - 1 )

dim uit( no - 1 , paterns - 1 )

call init

''init inpout and output paterns

for p = 0 to paterns - 1

read a , b

p( 0 , p ) = a

p( 1 , p ) = b

uit( 0 , p ) = a xor b

next p

data 0,0 , 0,1 , 1,0 , 1,1

''let NN live and learn

for e = 0 to 1000

''for eatch patern

fout = 0

for p = 0 to paterns - 1

''fill input cel's

for i = 0 to ni - 1

ai( i ) = p( i , p )

next i

''fil target

for o = 0 to no - 1

wish( o ) = uit( o , p )

next o

call calc

fout = fout + backprop( .5 , .5 )

next p

print e , fout

next e

end

function range( l , h )

range = rnd(0) * ( h - l ) + l

end function

sub init

''init neural net

for i = 0 to ni

ai( i ) = 1

next i

for i = 0 to nh - 1

ah( i ) = 1

next i

for i = 0 to no - 1

ao( i ) = 1

next i

for i = 0 to ni

for h = 0 to nh - 1

wi( i , h ) = range( -1 , 1 )

next h

next i

for h = 0 to nh - 1

for o = 0 to no - 1

wo( h , o ) = range( -1 , 1 )

next o

next h

end sub

sub calc

''forwart pass of neural net

for i = 0 to ni

ai( i ) = in( i )

next i

for h = 0 to nh - 1

sum = 0

for i = 0 to ni

sum = sum + ai( i ) * wi( i , h )

next i

ah( h ) = signoid( sum / ni )

next h

for o = 0 to no - 1

sum = 0

for h = 0 to nh - 1

sum = sum + ah( h ) * wo( h , o )

next h

ao( o ) = signoid( sum / nh )

next o

end sub

function tanh( x )

tanh = ( 1 -exp( -2 * x ) ) _

/ ( 1 + exp( -2 *x ) )

end function

function signoid( x )

signoid = tanh( x )

end function

function dsignoid( x )

dsignoid = 1 - x ^ 2

end function

function backprop( n , m )

'' http://www.youtube.com/watch?v=aVId8KMsdUU&feature=BFa&list=LLldMCkmXl4j9_v0HeKdNcRA

'' calc output deltas

'' we want to find the instantaneous rate of change of ( error with respect to weight from node j to node k)

'' output_delta is defined as an attribute of each ouput node. It is not the final rate we need.

'' To get the final rate we must multiply the delta by the activation of the hidden layer node in question.

'' This multiplication is done according to the chain rule as we are taking the derivative of the activation function

'' of the ouput node.

'' dE/dw[j][k] = (t[k] - ao[k]) * s'( SUM( w[j][k]*ah[j] ) ) * ah[j]

for k = 0 to no - 1

fout = wish( k ) - ao( k )

od( k ) = fout * dsinoid( ao( k ) )

next k

'' update output weights

for j = 0 to nh - 1

for k = 0 to no - 1

'' output_deltas[k] * self.ah[j]

'' is the full derivative of

'' dError/dweight[j][k]

c = od( k ) * ah( j )

wo( j , k ) = wo( j , k ) + n * c + m * co( j , k )

co( j , k ) = c

next k

next j

'' calc hidden deltas

for j = 0 to nh - 1

fout = 0

for k = 0 to no - 1

fout = fout + od( k ) * wo( j , k )

next k

hd( j ) = fout * dsignoid( ah( j ) )

next j

'' update input weights

for i = 0 to ni

for j = 0 to nh - 1

c = hd( j ) * ai( i )

wi( i , j ) = wi( i , j ) + n * c + m * ci( i , j )

ci( i , j ) = c

next j

next i

fout = 0

for k = 0 to no - 1

fout = fout _

+ ( wish( k ) - ao( k ) ) ^ 2

next k

backprop = fout / 2

end function

|

|

|

|

Post by tenochtitlanuk on Sept 24, 2018 14:10:21 GMT

You've a 'dsinoid' where you intend 'dsignoid'in one place.

I'd add a 'scan' to the main loop so you can break out of it.

Doesn't work yet though. Keep at it- I' like to see it running!

|

|

|

|

Post by bluatigro on Sept 30, 2018 8:37:03 GMT

update :

EUREKA !!!

IT IS WORKING NOW !!!

? :

how about any number of hidden layer's

'' bluatigro 20 sept 2018

'' ann try 3

'' based on :

''http://code.activestate.com/recipes/578148-simple-back-propagation-neural-network-in-python-s/

global ni , nh , no

ni = 2

nh = 2

no = 1

dim ai( ni ) , ah( nh - 1 )

dim ao( no - 1 ) , wish( no - 1 )

dim wi( ni , nh - 1 )

dim wo( nh - 1 , no - 1 )

dim ci( ni , nh - 1 )

dim co( nh - 1 , no - 1 )

dim od( nh ) , hd( nh )

global paterns

paterns = 4

dim p( ni , paterns )

dim uit( no , paterns )

call init

''init inpout and output paterns

for p = 0 to paterns - 1

read a , b

p( 0 , p ) = a

p( 1 , p ) = b

uit( 0 , p ) = a xor b

next p

data 0,0 , 0,1 , 1,0 , 1,1

''let NN live and learn

for e = 0 to 10000

''for eatch patern

fout = 0

for p = 0 to paterns - 1

''fill input cel's

for i = 0 to ni - 1

ai( i ) = p( i , p )

next i

''fil target

for o = 0 to no - 1

wish( o ) = uit( o , p )

next o

call calc p

fout = fout + backprop( .5 , .5 )

next p

if e mod 100 = 0 then print e , fout

next e

restore

print "a | b | a xor b" , "nn"

for i = 0 to paterns - 1

read a , b

p( 0 , i ) = a

p( 1 , i ) = b

call calc i

print a ; " | " ; b ; " | " ; a xor b _

, " " ; ao( 0 )

next i

print "[ game over ]"

end

function range( l , h )

range = rnd(0) * ( h - l ) + l

end function

sub init

''init neural net

for i = 0 to ni

ai( i ) = 1

next i

for i = 0 to nh - 1

ah( i ) = 1

next i

for i = 0 to no - 1

ao( i ) = 1

next i

for i = 0 to ni

for h = 0 to nh - 1

wi( i , h ) = range( -1 , 1 )

next h

next i

for h = 0 to nh - 1

for o = 0 to no - 1

wo( h , o ) = range( -1 , 1 )

next o

next h

end sub

sub calc p

''forwart pass of neural net

for i = 0 to ni - 1

ai( i ) = p( i , p )

next i

for h = 0 to nh - 1

sum = 0

for i = 0 to ni

sum = sum + ai( i ) * wi( i , h )

next i

ah( h ) = signoid( sum / ni )

next h

for o = 0 to no - 1

sum = 0

for h = 0 to nh - 1

sum = sum + ah( h ) * wo( h , o )

next h

ao( o ) = signoid( sum / nh )

next o

end sub

function tanh( x )

tanh = ( 1 - exp( -2 * x ) ) _

/ ( 1 + exp( -2 *x ) )

end function

function signoid( x )

signoid = tanh( x )

end function

function dsignoid( x )

dsignoid = 1 - x ^ 2

end function

function backprop( n , m )

'' http://www.youtube.com/watch?v=aVId8KMsdUU&feature=BFa&list=LLldMCkmXl4j9_v0HeKdNcRA

'' calc output deltas

'' we want to find the instantaneous rate of change of ( error with respect to weight from node j to node k)

'' output_delta is defined as an attribute of each ouput node. It is not the final rate we need.

'' To get the final rate we must multiply the delta by the activation of the hidden layer node in question.

'' This multiplication is done according to the chain rule as we are taking the derivative of the activation function

'' of the ouput node.

'' dE/dw[j][k] = (t[k] - ao[k]) * s'( SUM( w[j][k]*ah[j] ) ) * ah[j]

for k = 0 to no - 1

fout = wish( k ) - ao( k )

od( k ) = fout * dsignoid( ao( k ) )

next k

'' update output weights

for j = 0 to nh - 1

for k = 0 to no - 1

'' output_deltas[k] * self.ah[j]

'' is the full derivative of

'' dError/dweight[j][k]

c = od( k ) * ah( j )

wo( j , k ) = wo( j , k ) + n * c + m * co( j , k )

co( j , k ) = c

next k

next j

'' calc hidden deltas

for j = 0 to nh - 1

fout = 0

for k = 0 to no - 1

fout = fout + od( k ) * wo( j , k )

next k

hd( j ) = fout * dsignoid( ah( j ) )

next j

'' update input weights

for i = 0 to ni

for j = 0 to nh - 1

c = hd( j ) * ai( i )

wi( i , j ) = wi( i , j ) + n * c + m * ci( i , j )

ci( i , j ) = c

next j

next i

fout = 0

for k = 0 to no - 1

fout = fout _

+ ( wish( k ) - ao( k ) ) ^ 2

next k

backprop = fout / 2

end function

|

|

|

|

Post by bluatigro on Sept 30, 2018 9:53:50 GMT

update :

try at any number of hidden layer's

error :

underflowexception

'' bluatigro 20 sept 2018

'' ann try 3

'' based on :

''http://code.activestate.com/recipes/578148-simple-back-propagation-neural-network-in-python-s/

global ni , nh , nl , no

ni = 2

nh = 2

nl = 2

no = 1

dim ai( ni ) , ah( nl , nh )

dim ao( no ) , wish( no )

dim wi( ni , nh )

dim wh( in( nl , nh , nh ) )

dim wo( nh , no )

dim ci( ni , nh )

dim co( nh , no )

dim ch( in( nl , nh , nh ) )

dim od( nh ) , hd( nh )

global paterns

paterns = 4

dim p( ni , paterns )

dim uit( no , paterns )

call init

''init inpout and output paterns

for p = 0 to paterns - 1

read a , b

p( 0 , p ) = a

p( 1 , p ) = b

uit( 0 , p ) = a xor b

next p

data 0,0 , 0,1 , 1,0 , 1,1

''let NN live and learn

for e = 0 to 10000

''for eatch patern

fout = 0

for p = 0 to paterns - 1

''fill input cel's

for i = 0 to ni - 1

ai( i ) = p( i , p )

next i

''fil target

for o = 0 to no - 1

wish( o ) = uit( o , p )

next o

call calc p

fout = fout + backprop( .5 , .5 )

next p

if e mod 100 = 0 then print e , fout

next e

restore

print "a | b | a xor b" , "nn"

for i = 0 to paterns - 1

read a , b

p( 0 , i ) = a

p( 1 , i ) = b

call calc i

print a ; " | " ; b ; " | " ; a xor b _

, " " ; ao( 0 )

next i

print "[ game over ]"

end

function in( l , i , c )

in = l * nh * nh + i * nh + c

end function

function range( l , h )

range = rnd(0) * ( h - l ) + l

end function

sub init

''init neural net

for i = 0 to ni

ai( i ) = 1

next i

for l = 0 to nl - 1

for h = 0 to nh

ah( l , h ) = 1

next h

next l

for i = 0 to no - 1

ao( i ) = 1

next i

for i = 0 to ni

for h = 0 to nh - 1

wi( i , h ) = range( -1 , 1 )

next h

next i

for l = 0 to nl - 1

for hx = 0 to nh

for hy = 0 to nh

wh( in( l , hx , hy ) ) = range( -1 , 1 )

next hy

next hx

next l

for h = 0 to nh - 1

for o = 0 to no - 1

wo( h , o ) = range( -1 , 1 )

next o

next h

end sub

sub calc p

''forwart pass of neural net

for i = 0 to ni - 1

ai( i ) = p( i , p )

next i

for h = 0 to nh - 1

sum = 0

for i = 0 to ni

sum = sum + ai( i ) * wi( i , h )

next i

ah( h , 0 ) = signoid( sum / ni )

next h

for l = 1 to nl

for hx = 0 to nh

sum = 0

for hy = 0 to nh

sum = sum + ah( l - 1 , hy ) _

* wh( in( l - 1 , hx , hy ) )

next hy

ah( l , hx ) = signoid( sum / nh )

next hx

next l

for o = 0 to no - 1

sum = 0

for h = 0 to nh - 1

sum = sum + ah( nl , h ) * wo( h , o )

next h

ao( o ) = signoid( sum / nh )

next o

end sub

function tanh( x )

tanh = ( 1 - exp( -2 * x ) ) _

/ ( 1 + exp( -2 *x ) )

end function

function signoid( x )

signoid = tanh( x )

end function

function dsignoid( x )

dsignoid = 1 - x ^ 2

end function

function backprop( n , m )

'' http://www.youtube.com/watch?v=aVId8KMsdUU&feature=BFa&list=LLldMCkmXl4j9_v0HeKdNcRA

'' calc output deltas

'' we want to find the instantaneous rate of change of ( error with respect to weight from node j to node k)

'' output_delta is defined as an attribute of each ouput node. It is not the final rate we need.

'' To get the final rate we must multiply the delta by the activation of the hidden layer node in question.

'' This multiplication is done according to the chain rule as we are taking the derivative of the activation function

'' of the ouput node.

'' dE/dw[j][k] = (t[k] - ao[k]) * s'( SUM( w[j][k]*ah[j] ) ) * ah[j]

for k = 0 to no - 1

fout = wish( k ) - ao( k )

od( k ) = fout * dsignoid( ao( k ) )

next k

'' update output weights

for j = 0 to nh - 1

for k = 0 to no - 1

'' output_deltas[k] * self.ah[j]

'' is the full derivative of

'' dError/dweight[j][k]

c = od( k ) * ah( nh , j )

wo( j , k ) = wo( j , k ) + n * c + m * co( j , k )

co( j , k ) = c

next k

next j

'' calc hidden delta's hidden layer's

for l = nl to 1 step -1

for j = 0 to nh

fout = 0

for k = 0 to nh

fout = fout + od( k ) _

* wh( in( l , j , k ) )

next k

hd( j ) = fout _

* dsignoid( ah( l , j ) )

next j

'' update hidden layer's weight's

for i = 0 to nh

for j = 0 to nh

c = hd( j ) * ah( l - 1 , i )

wh( in( l , i , j ) ) _

= wh( in( l , i , j ) ) _

+ n * c + m _

* ch( in( l - 1 , i , j ) )

ch( in( l - 1 , i , j ) ) = c

next j

next i

next l

'' calc hidden deltas input layer

for j = 0 to nh - 1

fout = 0

for k = 0 to no - 1

fout = fout + od( k ) * wo( j , k )

next k

hd( j ) = fout * dsignoid( ah( 0 , j ) )

next j

'' update input weights

for i = 0 to ni

for j = 0 to nh - 1

c = hd( j ) * ai( i )

wi( i , j ) = wi( i , j ) _

+ n * c + m * ci( i , j )

ci( i , j ) = c

next j

next i

fout = 0

for k = 0 to no - 1

fout = fout _

+ ( wish( k ) - ao( k ) ) ^ 2

next k

backprop = fout / 2

end function

|

|

|

|

Post by bluatigro on Oct 15, 2018 7:05:11 GMT

update :

try at a game AI controled by a NN

not 3 :

put your X so that the computer puts

the 3e in a row or colom [ NOT diagonal ]

'' bluatigro 14 okt 2018

'' not3 NN init

'' based on :

''http://code.activestate.com/recipes/578148-simple-back-propagation-neural-network-in-python-s/

global ni , nh , no

ni = 9

nh = 81

no = 8

dim ai( ni ) , ah( nh )

dim ao( no ) , wish( no )

dim wi( ni , nh )

dim wo( nh , no )

dim ci( ni , nh )

dim co( nh , no )

dim od( nh ) , hd( nh )

call init

call save

print "[ not3 NN init ready ]"

end

sub save

open "not3-NN.txt" for output as #uit

print #uit , game.tel

for i = 0 to ni

for h = 0 to nh

print #uit , wi( i , h )

print #uit , ci( i , h )

next h

next i

for h = 0 to nh

for i = 0 to ni

print #uit , wo( h , o )

print #uit , co( h , o )

next i

next h

close #uit

input "[ init not3 NN txt ready ]" ; in$

end sub

function range( l , h )

range = rnd(0) * ( h - l ) + l

end function

sub init

''init neural net

for i = 0 to ni

ai( i ) = 1

next i

for i = 0 to nh - 1

ah( i ) = 1

next i

for i = 0 to no - 1

ao( i ) = 1

next i

for i = 0 to ni

for h = 0 to nh - 1

wi( i , h ) = range( -1 , 1 )

next h

next i

for h = 0 to nh - 1

for o = 0 to no - 1

wo( h , o ) = range( -1 , 1 )

next o

next h

end sub

'' bluatigro 14 okt 2018

'' not3 NN learn

'' based on :

''http://code.activestate.com/recipes/578148-simple-back-propagation-neural-network-in-python-s/

global ni , nh , no

ni = 9

nh = 81

no = 8

dim ai( ni ) , ah( nh )

dim ao( no ) , wish( no )

dim wi( ni , nh )

dim wo( nh , no )

dim ci( ni , nh )

dim co( nh , no )

dim od( nh ) , hd( nh )

dim bord( 9 ) , zet( 9 )

global move.tel , bord.moves , game.tel

call load

for epog = 0 to 1000

bord.moves = 0

move.tel = 0

fout = 0

while not( anyrow() )

call store.move rando.move()

wend

for i = 0 to 8

wish( i ) = 0

next i

for m = 0 to move.tel

if move.tel and 1 then

if m and 1 then

wish( zet( m ) ) = -1

else

wish( zet( m ) ) = 1

end if

else

if m and 1 then

wish( zet( m ) ) = 1

else

wish( zet( m ) ) = -1

end if

end if

fout = fout + backprop( .5 , .5 )

next m

print game.tel , fout

game.tel = game.tel + 1

next epog

call save

end

function anyrow()

anyrow = row( 0 , 1 , 2 ) _

or row( 3 , 4 , 5 ) _

or row( 6 , 7 , 8 ) _

or row( 0 , 3 , 6 ) _

or row( 1 , 4 , 7 ) _

or row( 2 , 5 , 8 )

end function

function row( a , b , c )

nu = bord.moves

row = bit( nu , a ) and bit( nu , b ) and bit( nu , c )

end function

sub store.move z

zet( move.tel ) = z

bord.moves = bord.moves or z

bord( move.tel ) = bord.moves

move.tel = move.tel + 1

end sub

sub load

open "not3-NN.txt" for input as #in

get #in , game.tel

for i = 0 to ni

for h = 0 to nh

get #in , a

get #in , b

wi( i , h ) = a

ci( i , h ) = b

next h

next i

for h = 0 to nh

for i = 0 to ni

get #in , a

get #in , b

wo( h , o ) = a

co( h , o ) = b

next i

next h

close #in

input "[ load not3 NN memory ready . ]" ; in$

end sub

sub save

open "not3-NN.txt" for output as #uit

print #uit , game.tel

for i = 0 to ni

for h = 0 to nh

print #uit , wi( i , h )

print #uit , ci( i , h )

next h

next i

for h = 0 to nh

for i = 0 to ni

print #uit , wo( h , o )

print #uit , co( h , o )

next i

next h

close #uit

input "[ save not3 NN memory ready ]" ; in$

end sub

function rando.move()

dice = int( rnd(0) * 9 )

while bord.moves and 2 ^ dice

dice = int( rnd(0) * 9 )

wend

rando.move = dice

end function

function range( l , h )

range = rnd(0) * ( h - l ) + l

end function

sub init

''init neural net

for i = 0 to ni

ai( i ) = 1

next i

for i = 0 to nh - 1

ah( i ) = 1

next i

for i = 0 to no - 1

ao( i ) = 1

next i

for i = 0 to ni

for h = 0 to nh - 1

wi( i , h ) = range( -1 , 1 )

next h

next i

for h = 0 to nh - 1

for o = 0 to no - 1

wo( h , o ) = range( -1 , 1 )

next o

next h

end sub

function bit( p , i )

bit = ( p and 2 ^ i ) / ( 2 ^ i )

end function

sub calc patern

''forwart pass of neural net

for i = 0 to ni - 1

ai( i ) = bit( patern , i )

next i

for h = 0 to nh - 1

sum = 0

for i = 0 to ni

sum = sum + ai( i ) * wi( i , h )

next i

ah( h ) = signoid( sum / ni )

next h

for o = 0 to no - 1

sum = 0

for h = 0 to nh - 1

sum = sum + ah( h ) * wo( h , o )

next h

ao( o ) = signoid( sum / nh )

next o

end sub

function tanh( x )

tanh = ( 1 - exp( -2 * x ) ) _

/ ( 1 + exp( -2 *x ) )

end function

function signoid( x )

signoid = tanh( x )

end function

function dsignoid( x )

dsignoid = 1 - x ^ 2

end function

function backprop( n , m )

'' http://www.youtube.com/watch?v=aVId8KMsdUU&feature=BFa&list=LLldMCkmXl4j9_v0HeKdNcRA

'' calc output deltas

'' we want to find the instantaneous rate of change of ( error with respect to weight from node j to node k)

'' output_delta is defined as an attribute of each ouput node. It is not the final rate we need.

'' To get the final rate we must multiply the delta by the activation of the hidden layer node in question.

'' This multiplication is done according to the chain rule as we are taking the derivative of the activation function

'' of the ouput node.

'' dE/dw[j][k] = (t[k] - ao[k]) * s'( SUM( w[j][k]*ah[j] ) ) * ah[j]

for k = 0 to no - 1

fout = wish( k ) - ao( k )

od( k ) = fout * dsignoid( ao( k ) )

next k

'' update output weights

for j = 0 to nh - 1

for k = 0 to no - 1

'' output_deltas[k] * self.ah[j]

'' is the full derivative of

'' dError/dweight[j][k]

c = od( k ) * ah( j )

wo( j , k ) = wo( j , k ) + n * c + m * co( j , k )

co( j , k ) = c

next k

next j

'' calc hidden deltas

for j = 0 to nh - 1

fout = 0

for k = 0 to no - 1

fout = fout + od( k ) * wo( j , k )

next k

hd( j ) = fout * dsignoid( ah( j ) )

next j

'' update input weights

for i = 0 to ni

for j = 0 to nh - 1

c = hd( j ) * ai( i )

wi( i , j ) = wi( i , j ) + n * c + m * ci( i , j )

ci( i , j ) = c

next j

next i

fout = 0

for k = 0 to no - 1

fout = fout _

+ ( wish( k ) - ao( k ) ) ^ 2

next k

backprop = fout / 2

end function

|

|

|

|

Post by bluatigro on May 7, 2019 10:08:52 GMT

update :

fount example in c++

tryed translation

error :

i got strange results

''translated from :

''https://www.codeproject.com/Articles/1237026/Simple-MLP-Backpropagation-Artificial-Neural-Netwo

global trainsize : trainsize = 20

dim trainset( trainsize , 1 )

global pi : pi = atn( 1 ) * 4

global epog : epog = 5000

global epsilon : epsilon = 0.05

global n : n = 5

dim c( n ) , w( n ) , v( n )

for i = 0 to trainsize

trainset( i , 0 ) = i * pi * 2 / trainsize

trainset( i , 1 ) = sin( i * pi * 2 / trainsize )

next i

''main

for i = 0 to n - 1

w( i ) = rnd(0) - rnd(0)

v( i ) = rnd(0) - rnd(0)

c( i ) = rnd(0) - rnd(0)

next i

for i = 0 to epog

for j = 0 to trainsize

call train trainset(j,0) , trainset(j,1)

next j

print i

next i

for i = 0 to trainsize

x = i * pi * 2 / trainsize

y1 = sin( i * pi * 2 / trainsize )

y2 = f.theta( i * pi * 2 / trainsize )

print x , y1 , y2

next i

end

function sigmoid( x )

sigmoid = 1 / ( 1 + exp( 0 - x ) )

end function

function f.theta( x )

uit = 0

for i = 0 to n - 1

uit = uit + v( i ) * sigmoid( c( i ) * w( i ) * x )

next i

f.theta = uit

end function

sub train x , y

for i = 0 to n - 1

w(i) = w(i) - epsilon * 2 * ( f_theta( x ) - y ) * v(i) * x _

* ( 1 - sigmoid( c(i) + w(i) * x ) ) * sigmoid( c(i) + w(i) * x )

next i

for i = 0 to n - 1

v(i) = v(i) - epsilon * 2 * ( f_theta( x ) - y ) * sigmoid( c(i) + w(i) * x )

next i

b = b - epsilon * 2 * ( f_theta( x ) - y )

for i = 0 to n - 1

c(i) = c(i) - epsilon * 2 * ( f_theta( x ) - y ) * v(i) _

* ( 1 - sigmoid( c(i) + w(i) * x ) ) * sigmoid( c(i) + w(i) * x )

next i

end sub

|

|